Tversky Neural Networks

created: Aug. 16, 2025, 4:59 p.m. | updated: Aug. 17, 2025, 6:44 a.m.

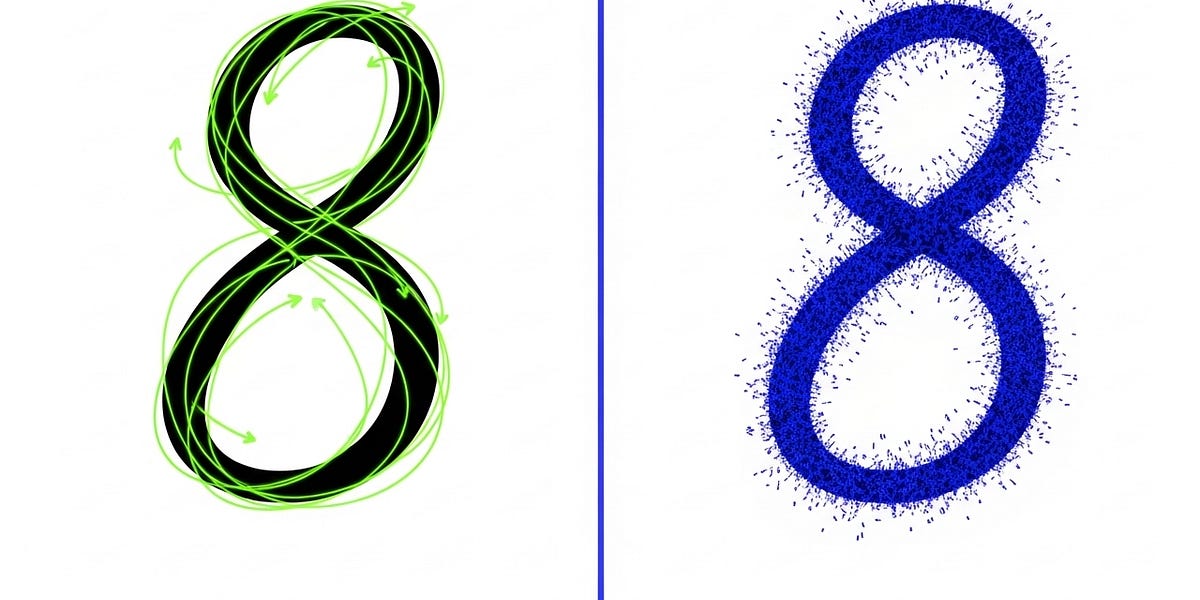

Differentiable Tversky similarity

The key innovation is a differentiable parameterization of Tversky similarity.
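For orientation, the classical Tversky contrast model that such a parameterization starts from can be written as below. The set notation ($A$, $B$ as the feature sets of objects $a$, $b$) and the salience measure $f$ come from Tversky's original model and are not spelled out in this summary; the weights $\theta$, $\alpha$, $\beta$ are the ones listed among the learnable parameters.

```latex
S(a, b) = \theta\, f(A \cap B) \;-\; \alpha\, f(A \setminus B) \;-\; \beta\, f(B \setminus A)
```

Common features raise the similarity, while features distinctive to either object lower it. Because $\alpha$ and $\beta$ may differ, $S(a, b)$ need not equal $S(b, a)$. A differentiable version has to replace the discrete set operations with operations on vectors so that gradients can flow through them.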

Next, Tversky neural networks are defined based on two new building blocks, including the Tversky Similarity Layer, which is analogous to metric similarity functions like dot product or cosine similarity.

However, instead of a simple scalar product, it uses the more complex and expressive nonlinear Tversky similarity function of the Tversky Similarity Layer.
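The contrast with a scalar product can be illustrated with a minimal sketch. Everything specific here is an assumption made for illustration, not the authors' exact formulation: an object "has" a feature when its dot product with the feature vector is positive, common features are scored by a product, and distinctive features by a clipped difference. The function names are hypothetical.

```python
def dot(u, v):
    """Plain scalar product, the usual similarity inside a linear layer."""
    return sum(x * y for x, y in zip(u, v))


def tversky_similarity(a, b, features, alpha, beta, theta):
    """Contrast-style similarity: theta*common - alpha*(a only) - beta*(b only).

    Assumed scoring rules (illustrative only):
    - feature strength is the dot product with the feature vector, clipped at 0
    - common features are scored by the product of both strengths
    - distinctive features are scored by the clipped strength difference
    """
    common = a_only = b_only = 0.0
    for f in features:
        sa = max(dot(a, f), 0.0)      # strength of feature f in a (0 if absent)
        sb = max(dot(b, f), 0.0)      # strength of feature f in b (0 if absent)
        common += sa * sb             # nonzero only when both express f
        a_only += max(sa - sb, 0.0)   # a expresses f more strongly than b
        b_only += max(sb - sa, 0.0)   # b expresses f more strongly than a
    return theta * common - alpha * a_only - beta * b_only


features = [[1.0, 0.0], [0.0, 1.0]]   # toy feature bank
a = [1.0, 1.0]                        # expresses both features
b = [1.0, 0.0]                        # expresses only the first feature

forward = tversky_similarity(a, b, features, alpha=0.8, beta=0.2, theta=1.0)
backward = tversky_similarity(b, a, features, alpha=0.8, beta=0.2, theta=1.0)

print("dot:    ", dot(a, b), dot(b, a))   # symmetric by construction
print("tversky:", forward, backward)      # differs when alpha != beta
```

With unequal `alpha` and `beta`, swapping the arguments changes the score, which a dot product or cosine similarity cannot express. All operations used are differentiable almost everywhere, so the same structure could be trained by gradient descent in an autodiff framework.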

In total, the learnable parameters in Tversky neural networks include prototype vectors Π, feature vectors Ω, and weights α, β, θ. Π and Ω can be shared between different layers.
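How these parameters might fit together in a layer can be sketched as follows. This is an illustrative assumption rather than the authors' implementation: the class name `TverskyLayerSketch`, the random initialization, and the scoring rules inside `tversky_similarity` are all hypothetical. Each output is the similarity of the input to one prototype, and two layers share the same feature bank Ω.

```python
import random


def dot(u, v):
    return sum(x * y for x, y in zip(u, v))


def tversky_similarity(a, b, features, alpha, beta, theta):
    # Assumed scoring rules, for illustration only (see lead-in).
    common = a_only = b_only = 0.0
    for f in features:
        sa = max(dot(a, f), 0.0)
        sb = max(dot(b, f), 0.0)
        common += sa * sb
        a_only += max(sa - sb, 0.0)
        b_only += max(sb - sa, 0.0)
    return theta * common - alpha * a_only - beta * b_only


class TverskyLayerSketch:
    """Hypothetical layer: one output per prototype, S(x, prototype)."""

    def __init__(self, prototypes, features, alpha=0.5, beta=0.5, theta=1.0):
        self.prototypes = prototypes  # prototype vectors (Pi)
        self.features = features      # feature vectors (Omega), possibly shared
        self.alpha = alpha            # weight on features distinctive to the input
        self.beta = beta              # weight on features distinctive to the prototype
        self.theta = theta            # weight on common features

    def __call__(self, x):
        return [
            tversky_similarity(x, p, self.features,
                               self.alpha, self.beta, self.theta)
            for p in self.prototypes
        ]


def random_vectors(count, dim, rng):
    return [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(count)]


rng = random.Random(0)
shared_features = random_vectors(4, 3, rng)  # one Omega used by both layers

layer1 = TverskyLayerSketch(random_vectors(3, 3, rng), shared_features)
layer2 = TverskyLayerSketch(random_vectors(2, 3, rng), shared_features)

hidden = layer1([0.2, -0.1, 0.7])  # 3 inputs -> 3 prototype similarities
output = layer2(hidden)            # 3 inputs -> 2 prototype similarities
print(hidden, output)
```

Sharing the feature bank means both layers hold a reference to the same list, so an update to Ω would affect both. In a real training setup, Π, Ω, α, β, and θ would be registered as trainable tensors in an autodiff framework instead of plain Python lists and floats.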

The prototype and feature vectors in Tversky networks still have to be learned, and not every initialization succeeded in the experiments.
